Tabular data

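Here are the overall results on classification problems for state-of-the-art AutoML frameworks on the AutoMLBenchmark (AMLB) test suite. For both metrics, higher is better: auc is the area under the ROC curve, and neg_logloss is the negated log loss, so values closer to zero are better. A nan value marks a missing result.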

| Dataset name | Metric name | AutoGluon | FEDOT | H2O | LAMA |
|---|---|---|---|---|---|
| APSFailure | auc | 0.990 | 0.991 | 0.992 | 0.992 |
| Amazon_employee_access | auc | 0.857 | 0.865 | 0.873 | 0.879 |
| Australian | auc | 0.940 | 0.939 | 0.938 | 0.945 |
| Covertype | neg_logloss | -0.071 | -0.117 | -0.265 | nan |
| Fashion-MNIST | neg_logloss | -0.329 | -0.373 | -0.380 | -0.248 |
| Jannis | neg_logloss | -0.728 | -0.737 | -0.691 | -0.664 |
| KDDCup09_appetency | auc | 0.804 | 0.822 | 0.829 | 0.850 |
| MiniBooNE | auc | 0.982 | 0.981 | nan | 0.988 |
| Shuttle | neg_logloss | -0.001 | -0.001 | -0.000 | -0.001 |
| Volkert | neg_logloss | -0.917 | -1.097 | -0.976 | -0.806 |
| adult | auc | 0.910 | 0.925 | 0.931 | 0.932 |
| bank-marketing | auc | 0.931 | 0.935 | 0.939 | 0.940 |
| blood-transfusion | auc | 0.690 | 0.759 | 0.754 | 0.750 |
| car | neg_logloss | -0.117 | -0.011 | -0.003 | -0.002 |
| christine | auc | 0.804 | 0.812 | 0.815 | 0.830 |
| cnae-9 | neg_logloss | -0.332 | -0.211 | -0.262 | -0.156 |
| connect-4 | neg_logloss | -0.502 | -0.456 | -0.338 | -0.337 |
| credit-g | auc | 0.795 | 0.778 | 0.798 | 0.796 |
| dilbert | neg_logloss | -0.148 | -0.159 | -0.103 | -0.033 |
| fabert | neg_logloss | -0.788 | -0.895 | -0.792 | -0.766 |
| guillermo | auc | 0.900 | 0.891 | nan | 0.926 |
| jasmine | auc | 0.883 | 0.888 | 0.888 | 0.880 |
| jungle chess | neg_logloss | -0.431 | -0.193 | -0.240 | -0.149 |
| kc1 | auc | 0.822 | 0.843 | nan | 0.831 |
| kr-vs-kp | auc | 0.999 | 1.000 | 1.000 | 1.000 |
| mfeat-factors | neg_logloss | -0.161 | -0.094 | -0.093 | -0.082 |
| nomao | auc | 0.995 | 0.994 | 0.996 | 0.997 |
| numerai28_6 | auc | 0.517 | 0.529 | 0.531 | 0.531 |
| phoneme | auc | 0.965 | 0.965 | 0.968 | 0.965 |
| segment | neg_logloss | -0.094 | -0.062 | -0.060 | -0.061 |
| sylvine | auc | 0.985 | 0.988 | 0.989 | 0.988 |
| vehicle | neg_logloss | -0.515 | -0.354 | -0.331 | -0.404 |
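In AMLB, auc is usually reported for binary tasks and log loss for multiclass tasks; the log loss is negated so that, like auc, a higher value is better. The minimal sketch below shows what a neg_logloss value means, assuming integer class labels and one list of predicted class probabilities per sample. The function name neg_logloss and the clipping constant eps are illustrative choices, not the benchmark's own implementation.

```python
import math


def neg_logloss(y_true, proba, eps=1e-15):
    """Negated mean multiclass log loss (hypothetical helper).

    y_true: integer class labels, one per sample.
    proba:  predicted class probabilities, one list per sample.
    Returns a value <= 0; values closer to 0 are better.
    """
    total = 0.0
    for label, probs in zip(y_true, proba):
        # Clip the probability of the true class so log(0) never occurs.
        p = min(max(probs[label], eps), 1 - eps)
        total += math.log(p)
    # log loss = -mean(log p); negating it gives mean(log p).
    return total / len(y_true)


# Two samples, two classes: true-class probabilities 0.9 and 0.8.
print(neg_logloss([0, 1], [[0.9, 0.1], [0.2, 0.8]]))  # ≈ -0.164
```

A confident, correct model pushes the value towards 0, while a confident mistake is penalized heavily (about -34.5 per sample with the clipping above), which is why small differences near zero, as for Shuttle, carry little weight.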

The results were obtained on a server based on an Intel Xeon Cascade Lake CPU (2900 MHz) with 12 cores and 24 GB of memory, used for the experiments with the local infrastructure. The 1h8c configuration (a 1-hour time budget on 8 cores) was used for AMLB.

Although the obtained metrics differ slightly from those reported in the AMLB paper, the results confirm that FEDOT is competitive with state-of-the-art solutions.

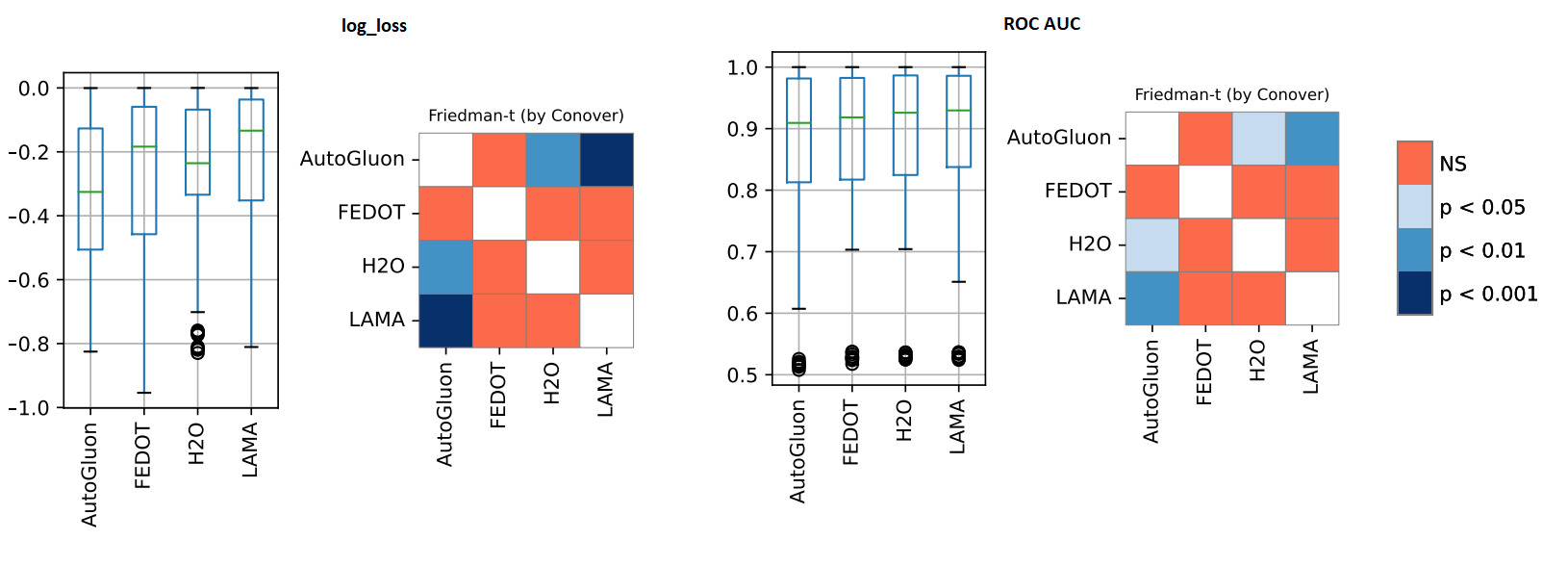

The statistical analysis was conducted using the Friedman test. The results of the experiments and of the analysis confirm that FEDOT's results are statistically indistinguishable from those of the state-of-the-art competitors H2O, AutoGluon and LAMA (see below).
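The Friedman test ranks the frameworks within each dataset and checks whether their mean ranks differ more than chance would allow. The pure-Python sketch below illustrates the computation under several assumptions: scores are higher-is-better, tied scores receive average ranks, no tie correction is applied, and there are exactly four frameworks, so the chi-square p-value with 3 degrees of freedom has a closed form. The scores are a subset of table rows without nan; the names SCORES, rank_row and friedman are hypothetical, and this is not the analysis that produced the conclusion above. With only eight datasets, the chi-square approximation is rough.

```python
import math

# Scores copied from a subset of the table rows that contain no nan.
# Column order: AutoGluon, FEDOT, H2O, LAMA. Higher is better for both
# auc and neg_logloss, so the best framework in each row gets rank 1.
FRAMEWORKS = ["AutoGluon", "FEDOT", "H2O", "LAMA"]
SCORES = {
    "APSFailure": [0.990, 0.991, 0.992, 0.992],
    "Amazon_employee_access": [0.857, 0.865, 0.873, 0.879],
    "Australian": [0.940, 0.939, 0.938, 0.945],
    "Fashion-MNIST": [-0.329, -0.373, -0.380, -0.248],
    "Jannis": [-0.728, -0.737, -0.691, -0.664],
    "adult": [0.910, 0.925, 0.931, 0.932],
    "blood-transfusion": [0.690, 0.759, 0.754, 0.750],
    "credit-g": [0.795, 0.778, 0.798, 0.796],
}


def rank_row(row):
    """Rank scores in descending order (1 = best), averaging ranks of ties."""
    order = sorted(range(len(row)), key=lambda i: -row[i])
    ranks = [0.0] * len(row)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied scores.
        while j + 1 < len(order) and row[order[j + 1]] == row[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i..j
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    return ranks


def friedman(rows):
    """Friedman chi-square statistic (no tie correction) and p-value for k = 4."""
    n, k = len(rows), len(rows[0])
    assert k == 4, "the closed-form p-value below assumes k - 1 = 3 degrees of freedom"
    ranked = [rank_row(r) for r in rows]
    mean_ranks = [sum(col) / n for col in zip(*ranked)]
    stat = 12 * n / (k * (k + 1)) * (sum(r * r for r in mean_ranks) - k * (k + 1) ** 2 / 4)
    stat = max(stat, 0.0)  # guard against tiny negative values from rounding
    # Survival function of the chi-square distribution with 3 degrees of freedom.
    p = math.erfc(math.sqrt(stat / 2)) + math.sqrt(2 * stat / math.pi) * math.exp(-stat / 2)
    return mean_ranks, stat, p


mean_ranks, stat, p = friedman(list(SCORES.values()))
for name, r in zip(FRAMEWORKS, mean_ranks):
    print(f"{name}: mean rank {r:.2f}")
print(f"Friedman chi2 = {stat:.3f}, p = {p:.3f}")
```

A large p-value means the test cannot reject the hypothesis that all frameworks perform equally; a full analysis would use all datasets, apply the tie correction, and follow a significant result with a post-hoc test.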